Are Getters and Setters the correct way to think of abstraction? What are the pros and cons of OOP and Procedural programming? And, in the OOP world, how can you define objects?

This is the third part of my series of tips about clean code.

Here’s the list (in progress)

- names and function arguments

- comments and formatting

- abstraction and objects

- error handling

- tests

In this article, I’m going to explain how to define classes in order to make your code extensible, more readable and easier to understand. In particular, I’m going to explain how to use abstraction effectively, what the difference is between pure OOP and Procedural programming, and how the Law of Demeter can help you structure your code.

The real meaning of abstraction

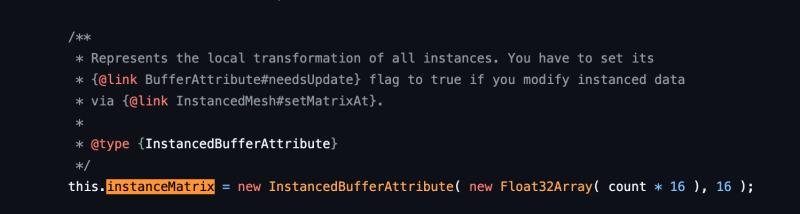

Some people think that abstraction is nothing but adding Getters and Setters to class properties, in order to (if necessary) manipulate the data before setting or retrieving it:

interface IMixer_A

{

void SetVolume(int value);

int GetVolume();

int GetMaxVolume();

}

class Mixer_A : IMixer_A

{

private const int MAX_VOLUME = 100;

private int _volume = 0;

public void SetVolume(int value) { _volume = value; }

public int GetVolume() { return _volume; }

public int GetMaxVolume() { return MAX_VOLUME; }

}

This way of structuring the class does not hide the implementation details, because any client that interacts with the Mixer knows that internally it works with integer values. A client should only know about the operations that can be performed on a Mixer.

Let’s see a better definition for an IMixer interface:

interface IMixer_B

{

void IncreaseVolume();

void DecreaseVolume();

void Mute();

void SetToMaxVolume();

}

class Mixer_B : IMixer_B

{

private const int MAX_VOLUME = 100;

private int _volume = 0;

public void IncreaseVolume()

{

if (_volume < MAX_VOLUME) _volume++;

}

public void DecreaseVolume()

{

if (_volume > 0) _volume--;

}

public void Mute() { _volume = 0; }

public void SetToMaxVolume()

{

_volume = MAX_VOLUME;

}

}

With this version, we can perform all the available operations without knowing the internal details of the Mixer. Some advantages?

- We can change the internal type for the _volume field, and store it as a ushort or a float, and change the other methods accordingly. And no one else will know it!

- We can add more methods, for instance a SetVolumeToPercentage(float percentage), without the risk of affecting the exposed methods

- We can perform additional checks and validation before performing the internal operations
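To make these points more concrete, here is a minimal sketch, not the only possible design. It assumes a hypothetical Mixer_C that stores the volume as a float between 0 and 1. The IMixer_C name, the step size and the GetDisplayText method are illustrative assumptions that do not appear in the snippets above.

```csharp
using System;

// Hypothetical variant of Mixer_B: same four operations, different internals.
public interface IMixer_C
{
    void IncreaseVolume();
    void DecreaseVolume();
    void Mute();
    void SetToMaxVolume();

    // New operation, added without changing the four methods above.
    void SetVolumeToPercentage(float percentage);
}

public class Mixer_C : IMixer_C
{
    private const float MAX_VOLUME = 1.0f;
    private const float STEP = 0.01f; // assumed step size

    // The internal type is now a float; clients never see it.
    private float _volume = 0f;

    public void IncreaseVolume() => _volume = Math.Min(MAX_VOLUME, _volume + STEP);

    public void DecreaseVolume() => _volume = Math.Max(0f, _volume - STEP);

    public void Mute() => _volume = 0f;

    public void SetToMaxVolume() => _volume = MAX_VOLUME;

    public void SetVolumeToPercentage(float percentage)
    {
        // Validation lives in one place, before the internal state is touched.
        if (float.IsNaN(percentage) || percentage < 0f || percentage > 100f)
            throw new ArgumentOutOfRangeException(nameof(percentage), "Expected a value between 0 and 100.");

        _volume = MAX_VOLUME * percentage / 100f;
    }

    // Hypothetical helper: a readable representation of the internal state.
    public string GetDisplayText() => $"{(int)Math.Round(_volume * 100f)}%";
}
```

Code that only calls IncreaseVolume, DecreaseVolume, Mute and SetToMaxVolume does not need to change its calls, even though the field is no longer an int.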

It can help to think of classes as if they were real objects you can interact with: if you have a stereo, you won’t manually set the volume inside its circuit, but you’ll press a button that increases the volume and performs all the operations for you. At the same time, the volume value you see on the display is a “human” representation of the internal state, not the real value.

Procedural vs OOP

Object-oriented programming works best if you expose behaviors so that clients don’t have to access any internal properties.

Have a look at this statement from Wikipedia:

The focus of procedural programming is to break down a programming task into a collection of variables, data structures, and subroutines, whereas in object-oriented programming it is to break down a programming task into objects that expose behavior (methods) and data (members or attributes) using interfaces. The most important distinction is that while procedural programming uses procedures to operate on data structures, object-oriented programming bundles the two together, so an “object”, which is an instance of a class, operates on its “own” data structure.

To see the difference between OO and Procedural programming, let’s write the same functionality in two different ways. In this simple program, I’m going to generate the <a> tag for content coming from different sources: Twitter and YouTube.

Procedural programming

public class IContent

{

public string Url { get; set; }

}

class Tweet : IContent

{

public string Author { get; set; }

}

class YouTubeVideo : IContent

{

public string ChannelName { get; set; }

}

Nice and easy: the classes don’t expose any behavior, but only their properties. So, a client class (I’ll call it LinkCreator) will use their properties to generate the HTML tag.

public static class LinkCreator

{

public static string CreateAnchorTag(IContent content)

{

switch (content)

{

case Tweet tweet: return $"<a href=\"{tweet.Url}\"> A post by {tweet.Author}</a>";

case YouTubeVideo yt: return $"<a href=\"{yt.Url}\"> A video by {yt.ChannelName}</a>";

default: return "";

}

}

}

We can notice that the Tweet and YouTubeVideo classes are really minimal, so they’re easy to read.

But there are some downsides:

- By only looking at the IContent classes, we don’t know what kind of operations the client can perform on them.

- If we add a new class that inherits from IContent, we must implement the operations that are already in place in every client. If we forget about it, the CreateAnchorTag method will return an empty string.

- If we change the type of URL (it becomes a relative URL or an object of type System.Uri), we must update all the methods that reference that field to propagate the change.
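The second downside is easy to reproduce. The sketch below repeats the procedural setup and adds a hypothetical BlogPost type (an assumption made only for this example) without touching LinkCreator. Everything compiles, but the new type silently falls into the default branch.

```csharp
// Procedural style: the content classes only carry data.
public class IContent
{
    public string Url { get; set; } = "";
}

public class Tweet : IContent
{
    public string Author { get; set; } = "";
}

// Hypothetical new content type, added without updating LinkCreator.
public class BlogPost : IContent
{
    public string Title { get; set; } = "";
}

public static class LinkCreator
{
    public static string CreateAnchorTag(IContent content)
    {
        switch (content)
        {
            case Tweet tweet:
                return $"<a href=\"{tweet.Url}\"> A post by {tweet.Author}</a>";

            default:
                // BlogPost ends up here: no compiler error, just an empty string.
                return "";
        }
    }
}
```

Calling LinkCreator.CreateAnchorTag with a BlogPost returns an empty string, and nothing warns you that a case is missing.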

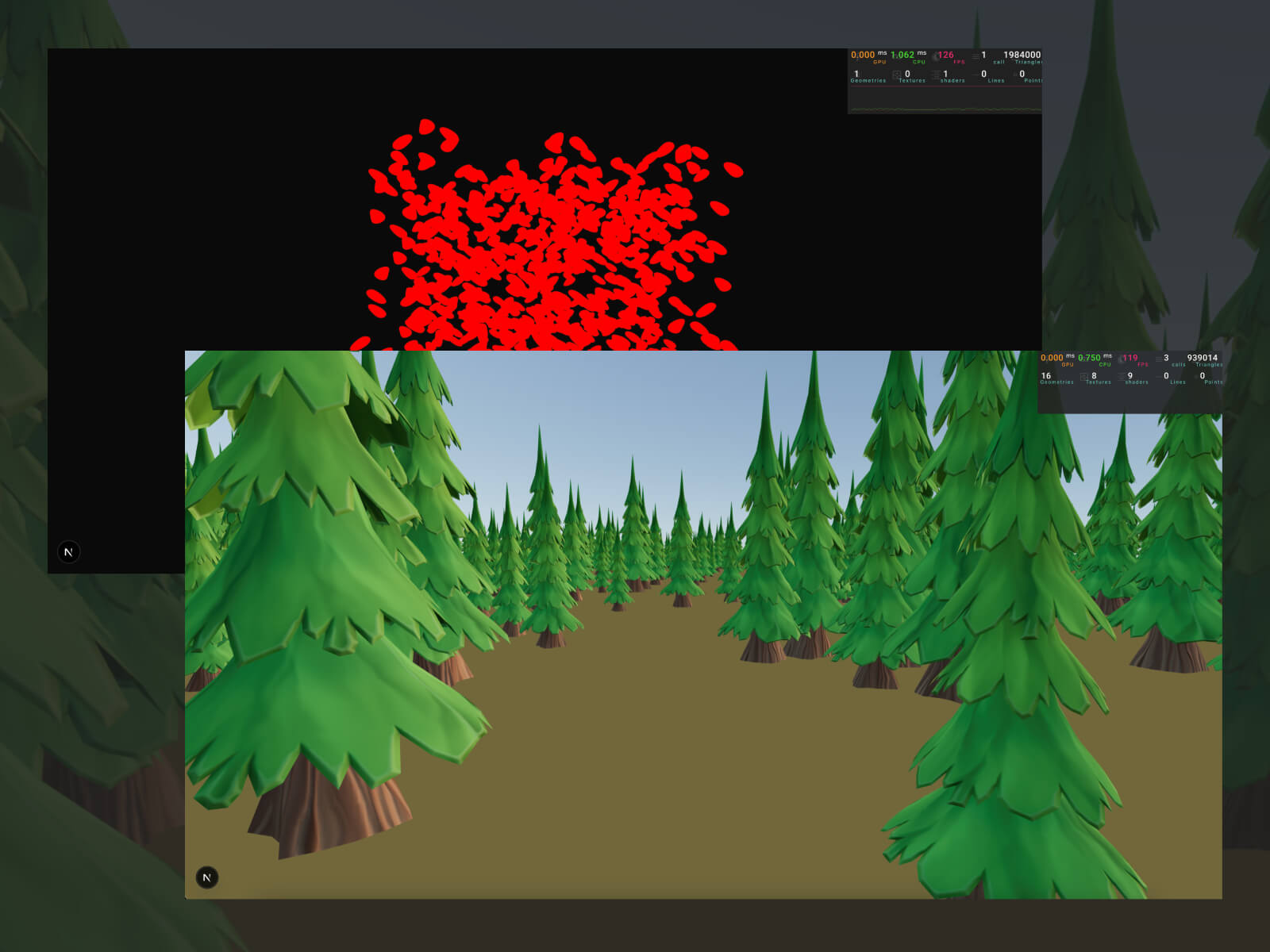

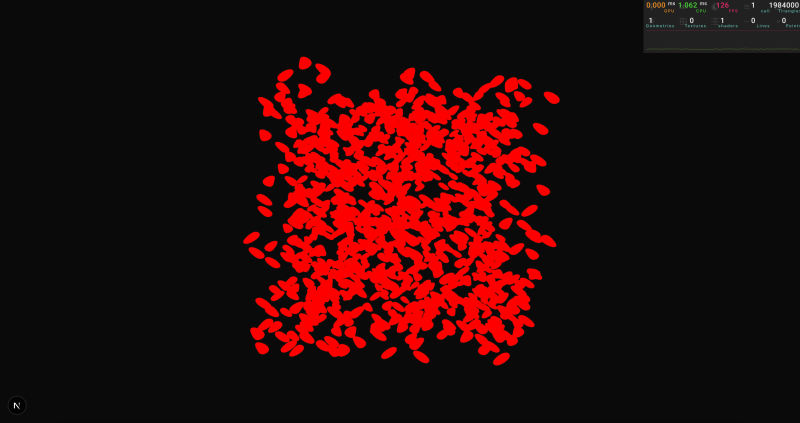

❗Procedural or OOP? 1️⃣/2️⃣

With Procedural all the operations are managed by LinkCreator.

PRO: you can add new functions to LinkCreator without affecting the Content subclasses.

CONS: when you add a new Content type, you must implement its methods in LinkCreator #cleancode pic.twitter.com/q8eHSZbUDD

— Davide Bellone | Code4it.dev | Microsoft MVP (@BelloneDavide) August 20, 2020

Object-oriented programming

In Object-oriented programming, we declare the functionalities to expose and we implement them directly within the class:

public interface IContent

{

string CreateAnchorTag();

}

public class Tweet : IContent

{

public string Url { get; }

public string Author { get; }

public string CreateAnchorTag()

{

return $"<a href=\"{Url}\"> A post by {Author}</a>";

}

}

public class YouTubeVideo : IContent

{

public string Url { get; }

public string ChannelName { get; }

public string CreateAnchorTag()

{

return $"<a href=\"{Url}\"> A video by {ChannelName}</a>";

}

}

We can see that the classes are more voluminous, but just by looking at a single class, we can see what functionalities they expose and how.

So, the LinkCreator class will be simplified, since it doesn’t have to worry about the implementations:

public static class LinkCreator

{

public static string CreateAnchorTag(IContent content)

{

return content.CreateAnchorTag();

}

}

But even here there are some downsides:

- If we add a new IContent type, we must implement every method explicitly (or, at least, leave a dummy implementation)

- If we expose a new method on IContent, we must implement it in every subclass, even when it’s not required (should I care about the total video duration for a Twitter channel? Of course not).

- It’s harder to create easy-to-maintain class hierarchies
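Here is a small sketch of the second point, under the assumption that we add a hypothetical GetDuration method to IContent. The constructors and the Duration property are also assumptions made for this example. The method only makes sense for videos, yet Tweet needs a dummy implementation just to satisfy the interface.

```csharp
using System;

public interface IContent
{
    string CreateAnchorTag();

    // Hypothetical new member: meaningful for videos only.
    TimeSpan GetDuration();
}

public class YouTubeVideo : IContent
{
    public string Url { get; }
    public string ChannelName { get; }
    public TimeSpan Duration { get; }

    public YouTubeVideo(string url, string channelName, TimeSpan duration)
    {
        Url = url;
        ChannelName = channelName;
        Duration = duration;
    }

    public string CreateAnchorTag() => $"<a href=\"{Url}\"> A video by {ChannelName}</a>";

    public TimeSpan GetDuration() => Duration;
}

public class Tweet : IContent
{
    public string Url { get; }
    public string Author { get; }

    public Tweet(string url, string author)
    {
        Url = url;
        Author = author;
    }

    public string CreateAnchorTag() => $"<a href=\"{Url}\"> A post by {Author}</a>";

    // Dummy implementation: a tweet has no duration, but the interface requires the method.
    public TimeSpan GetDuration() => TimeSpan.Zero;
}
```

Every other IContent implementation would need the same kind of placeholder.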

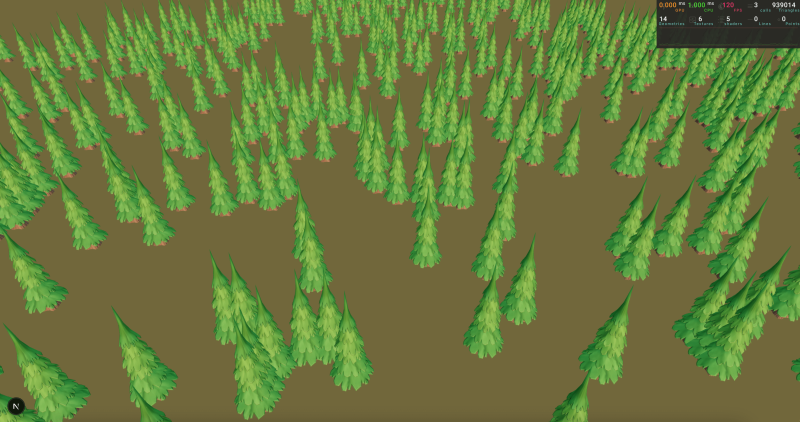

❗Procedural or OOP? 2️⃣/2️⃣

With OOP & polymorphism, each class implements its methods knowing the internal details of itself.

PRO: it’s easy to add new Content classes w/o affecting the siblings

CONS: if you need to expose a new method, you need to add it to all the siblings

— Davide Bellone | Code4it.dev | Microsoft MVP (@BelloneDavide) August 20, 2020

So what?

Luckily, we don’t live in a black-and-white world: there are many shades in between, and it’s highly unlikely that you’ll use pure OO programming or pure procedural programming.

So, don’t stick too much to the theory, but use whatever best fits your project and yourself.

Understand the pros and cons of each approach, and apply each one wherever it is needed.

Objects vs Data structure – according to Uncle Bob

❓ “Objects hide their data behind abstractions and expose functions that operate on that data. Data structure expose their data and have no meaningful functions”

For me, a data structure (eg: linked list) exposes the operations, not the internals.

What do you think? #cleancode

— Davide Bellone | Code4it.dev | Microsoft MVP (@BelloneDavide) August 22, 2020

There’s a statement by Uncle Bob that is the starting point of all his subsequent considerations:

Objects hide their data behind abstractions and expose functions that operate on that data. Data structure expose their data and have no meaningful functions.

Personally, I disagree with him. For me it’s the opposite: think of a linked list.

A linked list is a data structure consisting of a collection of nodes linked together to form a sequence. You can perform some operations, such as insertBefore, insertAfter, removeBefore and so on. But it exposes only the operations, not the internals: you won’t know if internally it is built with an array, a list, or some other structure.

interface ILinkedList

{

Node[] GetList();

void InsertBefore(Node node);

void InsertAfter(Node node);

void DeleteBefore(Node node);

void DeleteAfter(Node node);

}

On the contrary, a simple class used just as a DTO or as a View Model creates objects, not data structures.

class Person

{

public String FirstName { get; set; }

public String LastName { get; set; }

public DateTime BirthDate { get; set; }

}

Regardless of the names, it’s important to know when one type is preferred instead of the other. Ideally, you should not allow the same class to expose both properties and methods, like this one:

class Person

{

public String FirstName { get; set; }

public String LastName { get; set; }

public DateTime BirthDate { get; set; }

public string CalculateSlug()

{

return FirstName.ToLower() + "-" + LastName.ToLower() + "-" + BirthDate.ToString("yyyyMMdd");

}

}

An idea to avoid this kind of hybrid is to have a different class which manipulates the Person class:

static class PersonAttributesManager

{

public static string CalculateSlug(Person p)

{

return p.FirstName.ToLower() + "-" + p.LastName.ToLower() + "-" + p.BirthDate.ToString("yyyyMMdd");

}

}

In this way, we decouple the properties of a pure Person from the derived values that a specific client may need from that class.

The Law of Demeter

⁉ the Law of Demeter says that «a module should not know about the innards of the things it manipulates»

In the bad example the Client “knows” that an Item exposes a GetSerialNumber. It’s a kind of “second-level knowledge”.

A thread 🧵 – #cleancode https://t.co/PfygNCpZGr pic.twitter.com/1ovKnasBNX

— Davide Bellone | Code4it.dev | Microsoft MVP (@BelloneDavide) August 30, 2020

The Law of Demeter is a programming law that says that a module should only talk to its friends, not to strangers. What does it mean?

Say that you have a MyClass class that contains a MyFunction method, which can accept some arguments. The Law of Demeter says that MyFunction should only call the methods of

- MyClass itself

- a thing created within MyFunction

- every thing passed as a parameter to MyFunction

- every thing stored within the current instance of MyClass

This is strictly related to the fact that things (objects or data structures – it depends if you agree with the Author’s definitions or not) should not expose their internals, but only the operations on them.
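The four cases listed above can be sketched as follows. This is only an illustration: Report, Logger, Normalize and LoggedMessages are hypothetical names introduced for this example, and they are not part of the original code.

```csharp
using System.Collections.Generic;

// Hypothetical collaborator that exposes operations only.
public class Logger
{
    private readonly List<string> _messages = new List<string>();

    public void Log(string message) => _messages.Add(message);

    public int MessageCount => _messages.Count;
}

// Hypothetical type passed to MyFunction as a parameter.
public class Report
{
    private readonly List<string> _lines = new List<string>();

    public void AddLine(string line) => _lines.Add(line);

    public int LineCount => _lines.Count;
}

public class MyClass
{
    // A thing stored within the current instance of MyClass.
    private readonly Logger _logger = new Logger();

    public int LoggedMessages => _logger.MessageCount;

    public int MyFunction(Report report, string line)
    {
        // 1. A method of MyClass itself.
        var normalized = Normalize(line);

        // 2. A thing created within MyFunction.
        var audit = new List<string>();
        audit.Add(normalized);

        // 3. A thing passed as a parameter to MyFunction.
        report.AddLine(normalized);

        // 4. A thing stored within the current instance of MyClass.
        _logger.Log($"Added {audit.Count} line(s)");

        return report.LineCount;
    }

    private string Normalize(string line) => line.Trim();
}
```

Notice that MyFunction never reaches through one of these objects to get at another object behind it.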

Here’s an example of what not to do:

class LinkedListClient{

ILinkedList linkedList;

public void AddTopic(Node nd){

// do something

linkedList.NodesList.Next = nd;

// do something else

}

}

What happens if the implementation changes or you find a bug in it? You would have to update everything. Also, you are coupling the two classes too tightly.

A problem with this rule is that it also forbids the most common operations on base types:

class LinkedListClient{

ILinkedList linkedList;

public int GetCount(){

return linkedList.GetList().Length;

}

}

Here, the GetCount method is against the Law of Demeter, because it is performing operations on the array type returned by GetList. To solve this problem, you have to add the GetCount() method to the ILinkedList interface and call this method from the client.
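A possible shape for that fix is sketched below. To keep it short, it assumes a reduced ILinkedList with a hypothetical Add method instead of the insert and delete operations listed earlier. Node, SimpleLinkedList and the constructor of LinkedListClient are also assumptions.

```csharp
using System.Collections.Generic;

public class Node
{
    public string Value { get; }

    public Node(string value)
    {
        Value = value;
    }
}

// Reduced version of ILinkedList (assumption): the list itself knows how to count.
public interface ILinkedList
{
    void Add(Node node);
    int GetCount();
}

// Hypothetical implementation: the internal storage is never exposed.
public class SimpleLinkedList : ILinkedList
{
    private readonly List<Node> _nodes = new List<Node>();

    public void Add(Node node) => _nodes.Add(node);

    public int GetCount() => _nodes.Count;
}

public class LinkedListClient
{
    private readonly ILinkedList _linkedList;

    public LinkedListClient(ILinkedList linkedList)
    {
        _linkedList = linkedList;
    }

    public void AddTopic(Node node) => _linkedList.Add(node);

    // The client asks the list directly, without touching what GetList would return.
    public int GetCount() => _linkedList.GetCount();
}
```

If the list later switches to a different internal structure, LinkedListClient does not need to change.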

When it’s a single method, it’s acceptable. What about operations on strings or dates?

Take the Person class. If we exposed the BirthDate property as a method (something like GetBirthDate), we could do something like

class PersonExample{

void DoSomething(Person person){

var a = person.GetBirthDate().ToString("yyyy-MM-dd");

var b = person.GetBirthDate().AddDays(52);

}

}

which is perfectly reasonable. But it violates the law of Demeter: you can’t perform ToString and AddDays here, because you’re not using only methods exposed by the Person class, but also those exposed by DateTime.

A solution could be to add new methods to the Person class to handle these operations; of course, it would make the class bigger and less readable.
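As a rough sketch of that solution, the Person class could wrap the two DateTime operations. The method names GetFormattedBirthDate and GetBirthDatePlusDays, the constructor and the use of the invariant culture are assumptions made for this example.

```csharp
using System;
using System.Globalization;

public class Person
{
    private readonly DateTime _birthDate;

    public Person(DateTime birthDate)
    {
        _birthDate = birthDate;
    }

    // Hypothetical wrapper: replaces person.GetBirthDate().ToString("yyyy-MM-dd").
    public string GetFormattedBirthDate()
    {
        return _birthDate.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);
    }

    // Hypothetical wrapper: replaces person.GetBirthDate().AddDays(52).
    public DateTime GetBirthDatePlusDays(int days)
    {
        return _birthDate.AddDays(days);
    }
}
```

Each new need of a client (another format, another date calculation) would add one more method like these, which is exactly how the class grows.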

Therefore, I think that the Law of Demeter is a good rule of thumb, but you should consider it only as a suggestion and not as a strict rule.

If you want to read more, you can refer to this article by Carlos Caballero or to this one by Robert Brautigam.

Wrapping up

We’ve seen that it’s not so easy to define which behaviors a class should expose. Do we need pure data or objects with a behavior? And how can abstraction help us hide the internals of a class?

Also, we’ve seen that it’s perfectly fine to not stick to OOP principles strictly, because that’s a way of programming that can’t always be applied to our projects and to our processes.

Happy coding!